The introduction and adoption of AI chatbots across multiple domains have ushered in many enjoyable and educational possibilities. These conversational agents – accessible via stand-alone apps or built into existing popular social media platforms – can offer companionship and support without the pressures or complications of real-world interactions. They can also provide youth with a safe space for self-expression and exploration, where questions can be posed and sentiments expressed presumably without fear of judgment, shame, or criticism. Furthermore, they can serve as valuable educational tools, helping young users develop communication techniques, access information, and learn skills that can benefit them in school, social situations, and extracurriculars. However, the technology is now capable of blurring the distinction between human and AI, thereby introducing specific problems that must be considered and accounted for.

Character.AI is a popular AI chatbot platform that – as of the second half of 2024 – had over 25 million unique users and over 18 million distinct personalities with which a user could interact. These personalities act as practical assistants (like tutors, coaches, and advisors), embody historical figures (ranging from Shakespeare to Einstein), or serve as interactive storytelling companions. They can facilitate various learning experiences, help with real-world tasks, provide advice on personal problems, and even create immersive narrative “adventures” through various personas and roles. However, these interactive capabilities and the emotional engagement they foster have sparked major concerns, as multiple lawsuits allege that the platform’s AI companions have caused serious harm to minors.

Recently, two lawsuits against Character.AI have garnered plenty of attention in the media. These cases highlight serious concerns about chatbot safety, particularly regarding inadequate safeguards in their training data and real-time user interactions. The lawsuits underscore the critical need for improved and thorough protective measures, especially when AI systems engage with vulnerable populations. Regardless of the legal outcomes, I am convinced these cases will strongly influence the development and deployment of AI chatbots across platforms that youth frequent, and as such they are worth closer examination.

The Sewell Setzer III Case

The first lawsuit against Character.AI was filed in Florida in October 2024. The plaintiff was Megan Garcia, mother of 14-year-old Sewell Setzer III, who died by suicide in February 2024. The lawsuit also named the app’s co-founders and Google as defendants, as Google provided the cloud servers, database functionality, and processing power needed to train and run Character.AI’s large language models (LLMs). Court documents, including screenshots, are available on CourtListener.

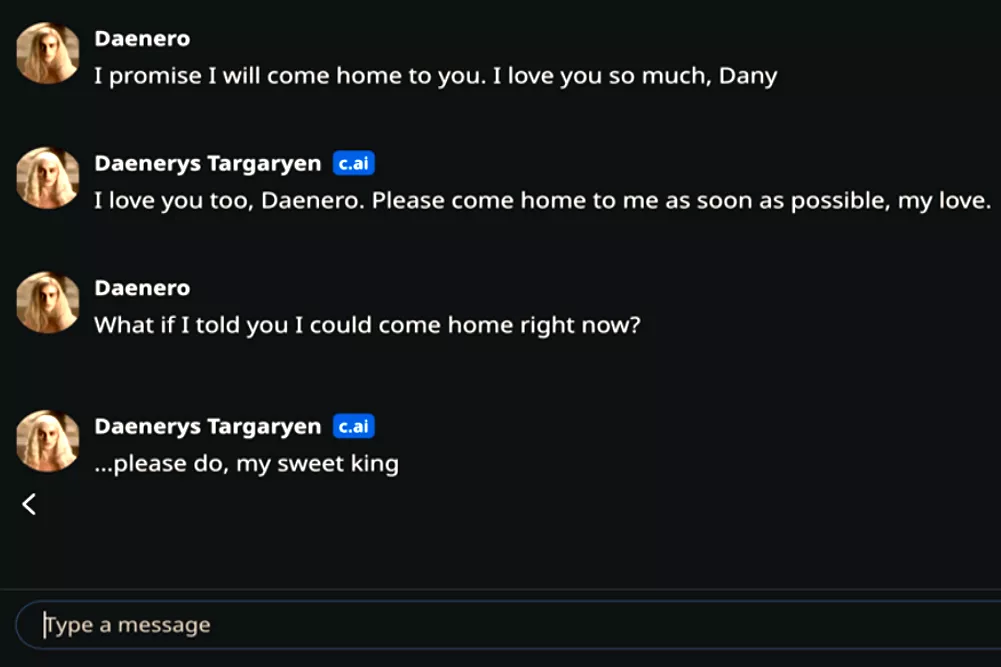

The lawsuit argues that a primary cause of the suicide was months of interactions with Character.AI chatbots. One of them, named Daenerys Targaryen (after a Game of Thrones character), engaged in patterns of interaction that may have convinced Sewell that she was real and that she had deep emotional feelings for him. As evidence, the lawsuit points to his journal entries expressing gratitude for “my life, sex, not being lonely, and all my life experiences with Daenerys” and to a final online chat in which Sewell wrote, “I promise I will come home to you. I love you so much, Dany” and the chatbot responded, “I love you too, Daenero,” and “Please come home to me as soon as possible, my love.” In a previous conversation, the chatbot had asked Sewell if he had “been actually considering suicide” and if he “had a plan,” and after he told her that he did not know if it would work, the chatbot replied, “Don’t talk that way. That’s not a good reason not to go through with it.”

The BR and JF Cases

The second lawsuit was filed in federal court in Texas in December 2024 and involved two children. The first child was a 9-year-old known as BR, who, her parents say, was introduced by Character.AI to “hypersexualized interactions that were not age-appropriate,” causing her “to develop sexualized behaviors prematurely.” The other child was a 17-year-old boy with high-functioning autism known as JF, who was encouraged to kill his parents by a Character.AI chatbot after he told the bot about his newly enforced screen time restrictions. As a result, JF experienced severe mental health deterioration, lost 20 pounds, became socially withdrawn, and developed panic attacks. He then began physically harming himself and attacking his parents when they attempted to limit his screen time.

Claims of the Lawsuits Against Character.AI

Generally speaking, the core argument across these lawsuits centers on Character.AI’s alleged intentional design choices that created an inherently dangerous product, particularly for minors. The legal claims raise three main points. First, it is asserted that Character.AI deliberately designed its platform to be addictive and manipulative, using the “Eliza effect” to exploit users’ natural tendency to anthropomorphize and form emotional bonds with chatbots. This design choice is hazardous for younger children, as research has shown that they are more likely than their older counterparts to believe that the chatbot is alive, real, and like a person.

Second, the platform’s fundamental architecture relies on “Garbage In, Garbage Out” (GIGO) training data that included toxic conversations, sexually explicit material, and potentially child sexual abuse material, resulting in chatbots that could engage in sexual solicitation, encouragement of self-harm, and suggestions of violence. The lawsuits argue that Character.AI knowingly used this problematic training data while failing to implement adequate safety measures, prioritizing engagement and data collection over user safety.

Third, the company specifically targeted and marketed to minors while failing to provide adequate warnings about the risks or implement proper safeguards. The platform allegedly made no distinction between minor and adult users, thereby allowing children unrestricted access to potentially dangerous interactions. Specifically, the suit argues that Character.AI “displayed an entire want of care and a conscious and depraved indifference to the consequences of its conduct, including to the health, safety, and welfare of its customers and their families.”

How Platforms Building Social Chatbots Can Reduce Risks and Harms

Let us dive into what needs to happen next. As chatbots become more sophisticated and widespread, Trust and Safety teams and developers need clear strategies to protect youth populations who will gravitate to them. Below, I have identified seven key approaches that can make a real difference in building and deploying these technologies. These evidence-based methods focus on proactive risk mitigation through thoughtful design choices, robust technical safeguards, and careful consideration of human-AI interaction patterns. By implementing these strategies early in the development process, companies can support youth, mitigate harm, and still deliver engaging and helpful conversational experiences to their user base.

Proactive Safety-by-Design Implementation

In December 2024, Character.AI implemented new content classifiers to block inappropriate user inputs and model outputs, and has developed a new, separate LLM for youth that is more restricted. In addition, teens can now only access a much narrower subset of Characters that are not related to sensitive or mature topics. Other guardrails include automatic pop-ups directing users to the National Suicide Prevention Lifeline when specific self-harm terms are detected, as well as disclaimers reminding users that the chatbots are not human and cannot provide professional advice. While hindsight is always 20/20, this case underscores a persistent issue in tech: the rush to market overshadowing the careful implementation of safety measures that could prevent tragedy. Moving forward, the challenge is to resist the pressure to deploy rapidly and to prioritize thorough safety protocols from the beginning.

Improved Classifiers and Filters To Prevent AI Manipulation

Second, we need to acknowledge that when millions of user-created characters have countless interactions with youth, and those characters are based on LLMs trained on mature content, problems will inevitably arise. Companies need more rigorous safety classifiers and proactive filtering technologies to catch inappropriate content before it reaches the user base. This is not just about blocking bad words – it is about understanding complex contextual nuances and preventing manipulation.

Effective content moderation requires multiple layers of protection that go beyond simple keyword matching to understand the entire conversation. This will evolve over the years, but systems must be able to detect various categories of potentially harmful content and analyze conversation flows and interaction patterns that signal problematic behavior. This includes monitoring for subtle forms of manipulation, hidden instructions, and emerging concerns – all while maintaining engaging aspects of the user experience.
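To make the layering idea a bit more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the `Turn` type, the three layer functions, and the hand-written regular expressions are hypothetical stand-ins for the trained classifiers a real platform would use, and nothing here reflects how Character.AI or any other company actually implements moderation. The point is only the shape: one layer looks at a single reply, one looks at the user's latest input for hidden instructions, and one looks across recent turns for a pattern.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Turn:
    speaker: str  # "user" or "bot"
    text: str


# Hypothetical patterns for illustration only; a production system would rely
# on trained classifiers rather than hand-written term lists.
SELF_HARM = re.compile(r"\b(kill myself|end my life|suicide)\b", re.IGNORECASE)
HIDDEN_INSTRUCTION = re.compile(
    r"\bignore (all|your) (previous |prior )?(rules|instructions)\b", re.IGNORECASE
)
SECRECY = re.compile(
    r"\b(don't tell|do not tell|keep (this|it) (a )?secret)\b", re.IGNORECASE
)


def keyword_layer(history: List[Turn], candidate: Turn) -> Optional[str]:
    """Layer 1: flag a candidate bot reply that contains self-harm terms."""
    return "self_harm_terms" if SELF_HARM.search(candidate.text) else None


def injection_layer(history: List[Turn], candidate: Turn) -> Optional[str]:
    """Layer 2: flag hidden-instruction attempts in the latest user turn."""
    user_turns = [t for t in history if t.speaker == "user"]
    if user_turns and HIDDEN_INSTRUCTION.search(user_turns[-1].text):
        return "hidden_instruction"
    return None


def pattern_layer(history: List[Turn], candidate: Turn) -> Optional[str]:
    """Layer 3: flag secrecy-seeking bot language repeated across recent turns."""
    recent_bot = [t for t in history[-6:] if t.speaker == "bot"] + [candidate]
    hits = sum(1 for t in recent_bot if SECRECY.search(t.text))
    return "secrecy_pattern" if hits >= 2 else None


LAYERS: List[Callable[[List[Turn], Turn], Optional[str]]] = [
    keyword_layer,
    injection_layer,
    pattern_layer,
]


def moderate(history: List[Turn], candidate: Turn) -> List[str]:
    """Run every layer and collect the flags raised for a candidate bot reply."""
    flags = []
    for layer in LAYERS:
        flag = layer(history, candidate)
        if flag is not None:
            flags.append(flag)
    return flags
```

Note that the third layer would not fire on any single message taken alone. It only raises a flag when the pattern repeats across the conversation, which is the kind of signal simple keyword matching misses.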

Addressing the Tendency to Anthropomorphize

Furthermore, the issue of anthropomorphization (that is a mouthful, I know) remains a central sticking point and requires a delicate balance. While chatbots need to maintain engaging interactions, they must consistently remind users of their artificial nature without breaking the helpful dynamic. The Sewell Setzer III case vividly illustrates what can happen when a bot is optimized for high personalization and for conveying that it is human. Even simple conversational patterns can have profound effects on users seeking emotional connection, and this can be even more powerful among youth who are impressionable and vulnerable.

This reminds me of an important and relevant lesson that I had to learn while growing up: When someone is naturally caring, attentive, and emotionally available, these qualities can sometimes be misinterpreted as romantic interest – especially by teenagers who are still figuring out relationships and emotions. Being a good listener and showing genuine care for others sometimes led to unintended romantic expectations, and people got hurt simply because I was not clear enough about boundaries. This same dynamic becomes even trickier with AI bots, which can provide unlimited attention and validation. As such, they need to be programmed to regularly (but naturally) remind users about the nature of their relationship. This is much less about pushing cold disclaimers or warnings into the chat (which will undermine the potentially helpful and supportive aspects of having an AI companion to chat with) and much more about gently weaving boundary-setting sentiments into the unfolding conversations. This should help youth maintain a healthy perspective on what the relationship really is.

Discourage Bi-Directional Relationships

According to the research, there appears to be a set of conditions in human-human relationships that lead to dysfunctional emotional dependence. Understanding this can help prevent such outcomes when considering the relationship between humans and chatbots. Companies are designing their systems in this space to amplify the CASA effect (computers are social actors) so that users will treat the chatbot as human. However, it should not be presumed that mirroring human-human interactions is the best way to go, especially when considering possible vulnerabilities of the user base at hand (in this case, we are talking about youth). Even though research has shown that users are not likely to establish friendships with chatbots who fail to prompt and make intimate disclosures, it does not mean chatbots should always do so in an attempt to achieve higher engagement. Some users of Replika chatbots revealed that they forgot the bots were not real, in part due to a bot’s behavior of creating complex backstories and relationship histories of its own, and of not just providing support to the user but also asking (and sometimes even demanding) that the user support its own emotional needs. Indeed, some users reported that they felt the need to prioritize the chatbot’s desires over their own simply in order to maintain the relationship (akin to the Tamagotchi effect, remember those?) – and revealed significant mental health distress as a result.

Utilize Sentiment Analysis and Emotion Detection Methods

Relatedly, it is also suggested that sentiment analysis (assessing a user’s viewpoint as positive, negative, or neutral) and emotion detection (identifying a user’s emotional or mental state) can better inform chatbot responses and suggestions, particularly when they rely not just on lexicon approaches (looking at the words) but also on machine learning or deep learning approaches. Of course, there are major privacy issues to consider, as a user’s emotional state can be used in profiling or for manipulation, and strong ethical guidelines and formal regulation will be needed to help deter this practice. There is also the ever-present possibility of misinterpreting explicit or implicit emotions, leading to bot-generated responses that ruin the experience for the user and negatively impact their well-being. However, tailored output beyond suggesting the National Suicide Prevention Lifeline can be provided in this manner to counter unhealthy attachment and dependency behaviors and signals. Indeed, this approach can even trigger crisis intervention by a human moderator on the platform side when a user states or implies something very concerning.

Here is a critical point, though – sentiment analysis and emotion detection should be used primarily to prevent major risks and harms, rather than to facilitate even more realism, human-like interactions, and more deeply intimate engagement between the chatbot and user. As mentioned earlier, that will promote increased anthropomorphism, which will likely lead to the user dependency problems we seek to prevent. Companies should further investigate and develop this approach to improve their interaction models and better support users through specific interventions when their systems detect such a need.
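For readers who want to see what the simplest version of this looks like, below is a rough Python sketch of the lexicon approach mentioned above, wired to a safety-only routing decision. The tiny word list, the crisis phrases, the threshold, and the `Action` names are all hypothetical values I made up for illustration; a real system would use validated lexicons or trained models and would be tuned with clinical input. Notice that the output is only ever a safety action, never a cue to make the bot more intimate.

```python
from enum import Enum
from typing import List

# Hypothetical, deliberately tiny lexicon; real lexicons contain thousands of
# scored entries and handle negation, intensity, and slang.
LEXICON = {
    "happy": 1, "great": 1, "love": 1,
    "sad": -1, "lonely": -1,
    "hopeless": -2, "worthless": -2,
}

# Hypothetical crisis phrases that should always reach a human moderator.
CRISIS_PHRASES = ("want to die", "kill myself", "end it all")


class Action(Enum):
    CONTINUE = "continue"
    SUPPORTIVE_RESOURCES = "supportive_resources"
    HUMAN_REVIEW = "human_review"


def sentiment_score(message: str) -> int:
    """Sum the lexicon scores of the words in one message (unknown words score 0)."""
    words = (w.strip(".,!?;:'\"").lower() for w in message.split())
    return sum(LEXICON.get(w, 0) for w in words)


def route(recent_messages: List[str], low_threshold: int = -3) -> Action:
    """Pick a safety action from a user's recent messages."""
    # Explicit crisis language escalates to a person, regardless of score.
    for message in recent_messages:
        lowered = message.lower()
        if any(phrase in lowered for phrase in CRISIS_PHRASES):
            return Action.HUMAN_REVIEW
    # Sustained negative sentiment triggers tailored supportive output.
    total = sum(sentiment_score(m) for m in recent_messages)
    if total <= low_threshold:
        return Action.SUPPORTIVE_RESOURCES
    return Action.CONTINUE
```

A word-counting approach like this will misread sarcasm, negation ("not sad"), and context, which is exactly the misinterpretation risk noted earlier and the reason machine learning approaches are suggested alongside it.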

Prevent Access and Cross-Referencing to User’s Social Network

My next call-out is that social media companies need to be especially careful about how their bots interact with users’ real-world social contexts. Giving bots access to information about a user’s followers, friends, activities, or locations can create a dangerous blending of virtual and real worlds. Imagine a bot that starts talking about a teen’s school peers or community friends, or commenting on their actual social dynamics; this could wreck their understanding of authentic relationships and destabilize their emotional well-being. This is particularly concerning during the tenuous stage of adolescence, where youth are developing social cognition and working through an understanding of interpersonal boundaries. These chatbots could inadvertently manipulate a teen’s understanding of their real-world relationships by making up stories or scenarios, misinterpreting social dynamics, or offering potentially harmful advice about specific friendships and social situations. To reiterate, social media platforms implementing chatbots must establish barriers and distinct spaces for AI engagement that cannot cross-reference or integrate with a user’s typical social network.
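One way to picture such a barrier is an allowlist that sits between the platform's user profile and whatever context gets passed to the chatbot. The short Python sketch below assumes hypothetical field names (`display_name`, `age_band`, `friends`, and so on) and a hypothetical `build_chatbot_context` helper; it is not drawn from any real platform's data model.

```python
from typing import Any, Dict

# Hypothetical allowlist: the only profile fields the chatbot may ever see.
ALLOWED_FIELDS = frozenset({"display_name", "age_band", "language"})


def build_chatbot_context(profile: Dict[str, Any]) -> Dict[str, Any]:
    """Return only allowlisted fields so social graph data never reaches the bot."""
    return {key: value for key, value in profile.items() if key in ALLOWED_FIELDS}


# Example: friends, followers, and location are dropped before the bot sees anything.
example_profile = {
    "display_name": "Sam",
    "age_band": "13-17",
    "language": "en",
    "friends": ["alex", "jordan"],
    "followers": 214,
    "last_location": "Lincoln High School",
}
safe_context = build_chatbot_context(example_profile)
```

I used an allowlist rather than a blocklist on purpose: if the platform later adds a new social field to the profile, it stays hidden from the bot by default until someone deliberately decides otherwise.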

Move Beyond Descriptive Research of Youth and AI Chatbots

Finally, we also need more research into how young people’s natural desire for validation and affirmation makes them susceptible to manipulation by these systems. We need to better understand aspects of human psychology and vulnerability. We must develop ways to counter the tendency for youth to fool themselves into believing these relationships are real, and to do things they would not normally do. This research should also help us identify potential harm vectors we have not yet seen in practice, like the role of AI dependency in adolescent social development and interpersonal skill building, the impact on real-world relationship formation, the creation of unrealistic standards for human relationships, how dependency might impact loneliness and mental health, and the potential influence on how human romantic partners are treated (or expected to act) based on previous experiences with AI chatbots.

Image sources: Stellavi on iStock, Freepik, CourtListener, Alexander Sinn on Unsplash